Add the line `blacklist nouveau` to the end of the /etc/modprobe.d/nf file.

#Nvidia cuda toolkit vm driver#

If the Nouveau driver has been installed and loaded, perform the following operations to add the Nouveau driver to the blacklist to avoid conflicts:
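Whether Nouveau is currently loaded can be checked before touching the blacklist. The following is a minimal Python sketch, assuming a Linux guest that exposes `/proc/modules`; the helper names `loaded_modules` and `nouveau_is_loaded` are hypothetical and not part of any NVIDIA tooling.

```python
from pathlib import Path


def loaded_modules(proc_modules_text):
    """Return the set of module names found in /proc/modules-style text.

    Each non-empty line starts with the module name, followed by size,
    reference count, and other fields that are ignored here.
    """
    return {
        line.split()[0]
        for line in proc_modules_text.splitlines()
        if line.strip()
    }


def nouveau_is_loaded(proc_modules_path="/proc/modules"):
    """Return True if a module named 'nouveau' is listed as loaded."""
    path = Path(proc_modules_path)
    if not path.exists():
        # Not a Linux system, or /proc is not mounted: nothing to report.
        return False
    return "nouveau" in loaded_modules(path.read_text())


if __name__ == "__main__":
    print("nouveau loaded:", nouveau_is_loaded())
```

If this reports that Nouveau is loaded, the blacklist entry is worth adding; if not, the conflict described here is probably not the cause of the problem.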

#Nvidia cuda toolkit vm install#

#Nvidia cuda toolkit vm how to#

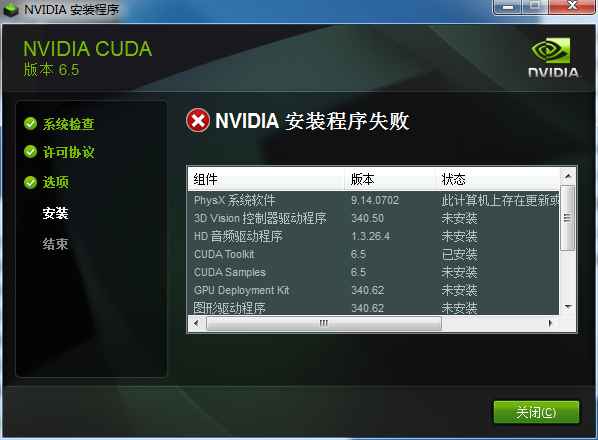

Nvidia-smi Error: NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver

There seems to be an NVIDIA driver issue in the A100 40GB VM instances that I spin up in GCP Compute Engine with a boot disk storage container, since `nvidia-smi`, when SSHing into a new instance, returns: NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Therefore, I've manually installed a CUDA driver by searching on:

Then verified the CUDA driver is installed by `$ nvidia-smi`:

    Fri Oct 21 10:03:18 2022
    NVIDIA-SMI 515.65.01    CUDA Version: 11.7
    GPU  Name  Persistence-M | Bus-Id  Disp.A | Volatile Uncorr. ECC
    Fan  Temp  Perf  Pwr:Usage/Cap | Memory-Usage | GPU-Util  Compute M.
    Off | 00000000:00:04.0 Off | 0
    N/A  34C  P0  52W / 400W | 0MiB / 40960MiB | 2%  Default
    Disabled
    Processes: GPU  GI ID  CI ID  PID  Type  Process name  GPU Memory Usage
    No running processes found

NVIDIA Driver Error: Found no NVIDIA driver on your system

However, my Python script that loads models to CUDA still errored out with RuntimeError: Found no NVIDIA driver on your system.

For context, this boot disk storage container (including the Python script) runs successfully on P100, T4, and V100 GPUs on GCP.

Please see stack trace below:

    File "service.py", line 223, in download_models
      config = model_name_function_mapping(model).eval().cuda()
    File "/opt/conda/lib/python3.8/site-packages/pytorch_lightning/core/mixins/device_dtype_mixin.py", line 128, in cuda
    File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 688, in cuda
      return self._apply(lambda t: t.cuda(device))
    File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 578, in _apply
    File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 601, in _apply
    File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 688, in
    File "/opt/conda/lib/python3.8/site-packages/torch/cuda/__init__.py", line 215, in _lazy_init
    RuntimeError: Found no NVIDIA driver on your system. Please check that you have an NVIDIA GPU and installed a driver from http://

Question

Can you please advise how to solve this NVIDIA driver issue? Our deployment spins up a GPU VM on-demand as inference requests arrive, thus ideally the A100 VM on GCP already has an Nvidia Driver pre-installed to avoid latency.
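Since `nvidia-smi` works over SSH while the Python script still finds no driver, one thing worth checking is whether the driver is visible from the environment the script actually runs in (for example, inside the container rather than on the host). The question does not confirm that this is the cause, so treat the following as a diagnostic sketch only. It assumes the usual Linux device nodes `/dev/nvidiactl` and `/dev/nvidia0` and the driver library name `libcuda.so.1`; the function name `driver_visibility_report` is hypothetical.

```python
import ctypes
import os
import shutil


def driver_visibility_report(dev_dir="/dev"):
    """Collect simple yes/no signals about NVIDIA driver visibility.

    Returns a dict of booleans. None of these checks need PyTorch, so the
    function can run even where importing torch or calling .cuda() fails.
    """
    report = {
        # Device nodes normally created when the kernel driver is loaded.
        "nvidiactl_device": os.path.exists(os.path.join(dev_dir, "nvidiactl")),
        "nvidia0_device": os.path.exists(os.path.join(dev_dir, "nvidia0")),
        # Whether the nvidia-smi binary is reachable from this environment.
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
    }
    try:
        # The user-space driver library that CUDA applications load.
        ctypes.CDLL("libcuda.so.1")
        report["libcuda_loadable"] = True
    except OSError:
        report["libcuda_loadable"] = False
    return report


if __name__ == "__main__":
    for check, ok in driver_visibility_report().items():
        print(f"{check}: {'yes' if ok else 'no'}")
```

Running this in the same container and user context as `service.py` shows which pieces are missing there. If every check passes but `.cuda()` still fails, the problem more likely lies elsewhere, for example in the PyTorch build or its CUDA version.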
